Abstract

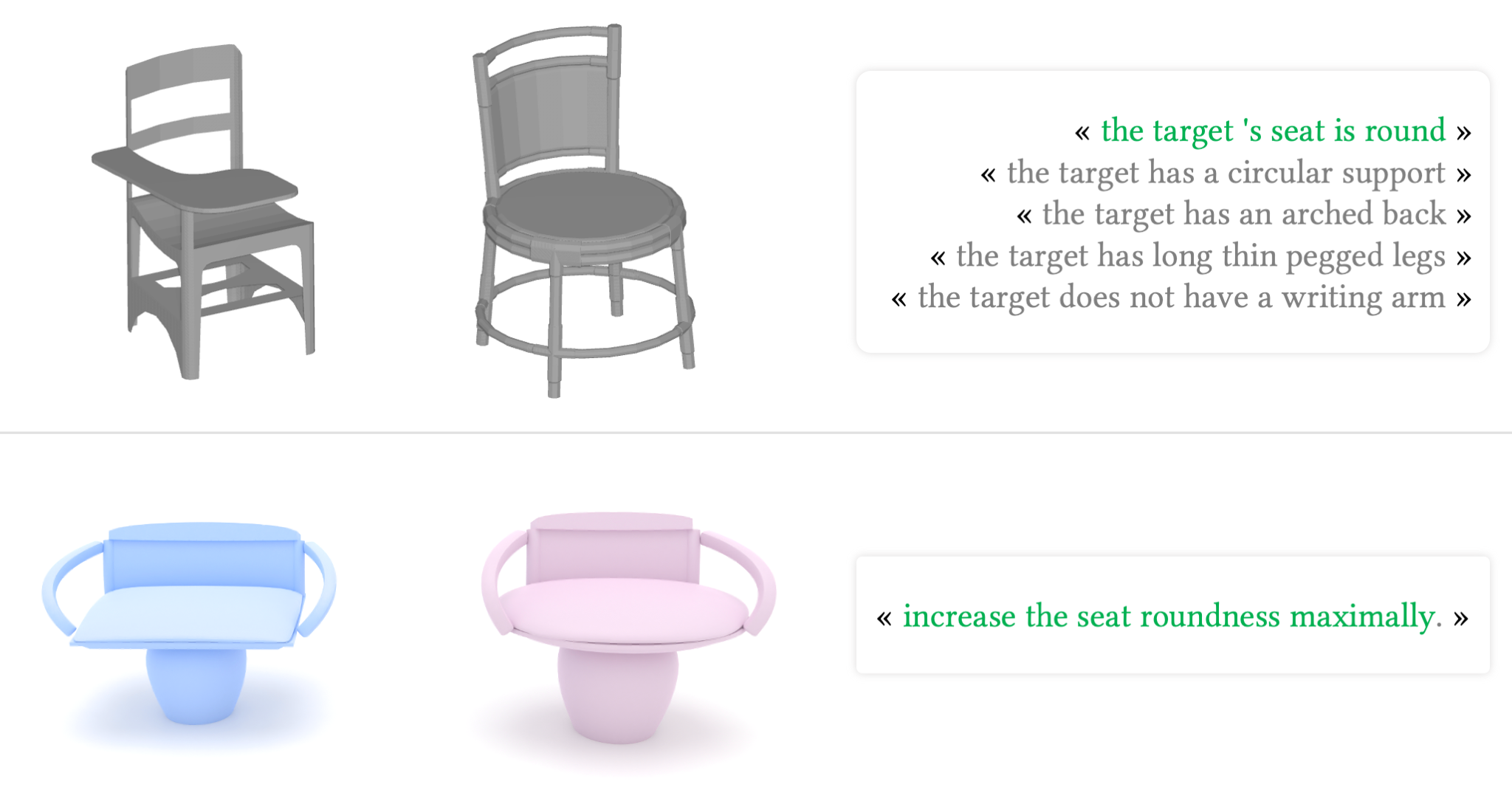

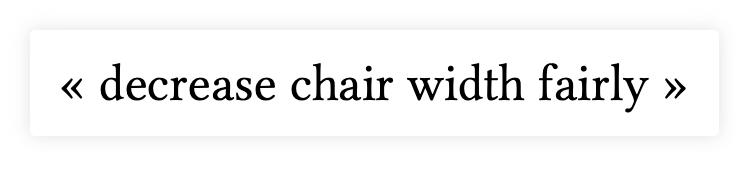

We introduce ShapeWalk, a curated dataset designed to advance language-guided compositional shape editing. The dataset consists of 158K unique shapes connected through 26K edit chains, with an average length of 14 chained shapes. Each consecutive pair of shapes is associated with a precise language instruction describing the applied edit. We synthesize edit chains by reconstructing shapes sampled from a realistic CAD-designed 3D dataset and interpolating between them in the parameter space of a shape program. We use rule-based methods and language models to generate the natural language prompt corresponding to each edit. To illustrate the practicality of our contribution, we train neural editor modules in the latent space of shape autoencoders and demonstrate that our dataset enables a variety of language-guided shape edits. Finally, we introduce multi-step editing metrics to benchmark the capacity of our models to perform recursive shape edits. We hope that our work will enable further study of compositional language-guided shape editing and find application in 3D CAD design and interactive modeling.
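As a rough illustration of what interpolating in the parameter space of a shape program could look like, the sketch below builds a chain of intermediate parameter vectors between a source and a target shape. It assumes that a shape is a flat list of real-valued program parameters and that interpolation is linear; the function name `interpolate_chain` and both assumptions are hypothetical and are not taken from the ShapeWalk pipeline.

```python
from typing import List


def interpolate_chain(
    source: List[float], target: List[float], steps: int
) -> List[List[float]]:
    """Return `steps` parameter vectors going linearly from source to target.

    Hypothetical sketch: assumes shapes are flat vectors of continuous
    shape-program parameters, which may not match the actual dataset.
    """
    if steps < 2:
        raise ValueError("a chain needs at least two shapes")
    if len(source) != len(target):
        raise ValueError("source and target must have the same parameters")

    chain = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the source, 1.0 at the target
        chain.append([(1 - t) * s + t * g for s, g in zip(source, target)])
    return chain


# Each consecutive pair in the chain would correspond to one edit step.
chain = interpolate_chain([0.0, 1.0], [1.0, 3.0], steps=3)
```

Under these assumptions, every consecutive pair of vectors in `chain` differs by a small, well-defined parameter change, which is the kind of difference a rule-based prompt generator could describe in words.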